reading-notes

https://faroukibrahim-fii.github.io/reading-notes/

Web Scraping

Web scraping is a technique to automatically access and extract large amounts of information from a website, which can save a huge amount of time and effort.

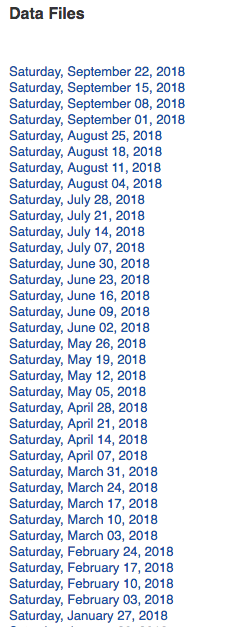

New York MTA Data

Turnstile data is compiled every week from May 2010 to present, so hundreds of .txt files exist on the site.

Inspecting the Website

First, we need to find where the links to the files we want to download sit within the multiple levels of HTML tags.

Python Code

We start by importing the following libraries.

```python
import requests
import urllib.request
import time
from bs4 import BeautifulSoup
```

Next, we set the URL of the website and access the site with the requests library.

```python
url = 'http://web.mta.info/developers/turnstile.html'
response = requests.get(url)
```

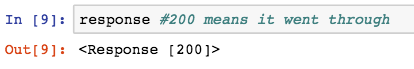

If the access was successful, printing `response` should show `<Response [200]>`.

The link-extraction code (not reproduced in these notes) saves the path of the first text file, 'data/nyct/turnstile/turnstile_180922.txt', to our variable link. The full URL to download the data is actually 'http://web.mta.info/developers/data/nyct/turnstile/turnstile_180922.txt', which I discovered by clicking on the first data file on the website as a test.
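As a rough sketch of that step, the snippet below pulls the first `.txt` link out of a page and resolves it against the page URL. It uses only the standard library (`html.parser` and `urljoin`) as a stand-in for BeautifulSoup, and `SAMPLE_HTML`, `LinkCollector`, and `first_txt_link` are assumptions made up for illustration, not the site's real markup or the original code.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin

PAGE_URL = 'http://web.mta.info/developers/turnstile.html'

# Hypothetical stand-in for the downloaded page; the real markup will differ.
SAMPLE_HTML = """
<div>
  <a href="turnstile.html">Home</a>
  <a href="data/nyct/turnstile/turnstile_180922.txt">Saturday, September 22, 2018</a>
  <a href="data/nyct/turnstile/turnstile_180915.txt">Saturday, September 15, 2018</a>
</div>
"""


class LinkCollector(HTMLParser):
    """Collect the href of every <a> tag, in document order."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == 'a':
            href = dict(attrs).get('href')
            if href:
                self.hrefs.append(href)


def first_txt_link(html):
    """Return the first href ending in .txt, or None if there is none."""
    collector = LinkCollector()
    collector.feed(html)
    return next((h for h in collector.hrefs if h.endswith('.txt')), None)


link = first_txt_link(SAMPLE_HTML)
# Resolve the relative path against the page URL to get the full download URL.
download_url = urljoin(PAGE_URL, link)
print(link)
print(download_url)
```

Resolving the relative path with `urljoin` avoids hard-coding the 'http://web.mta.info/developers/' prefix by hand.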

Last but not least, we should pause the code for a second between requests so that we are not spamming the website.

```python
time.sleep(1)
```
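To show where the pause fits when several files are downloaded, here is a minimal sketch of a loop that waits between requests. The helper `fetch_politely` and its `fetch` and `sleep` parameters are hypothetical names introduced here; the demo passes fake functions so nothing is actually downloaded.

```python
import time


def fetch_politely(urls, fetch, delay_seconds=1.0, sleep=time.sleep):
    """Call fetch(url) for each URL, pausing between consecutive requests."""
    results = []
    for index, url in enumerate(urls):
        if index > 0:
            sleep(delay_seconds)  # pause before every request except the first
        results.append(fetch(url))
    return results


# Demo with a fake fetch function and a fake sleep that only records the pauses.
pauses = []
pages = fetch_politely(
    ['page-1', 'page-2', 'page-3'],
    fetch=lambda url: url.upper(),
    sleep=pauses.append,
)
print(pages)
print(pauses)
```

In a real run, `fetch` could be something like `requests.get` and `sleep` would be left at its default of `time.sleep`.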

Techniques

Human copy-and-paste

The simplest form of web scraping is manually copying and pasting data from a web page into a text file or spreadsheet.

Text pattern matching

A simple yet powerful approach to extracting information from web pages can be based on the UNIX grep command or on the regular-expression matching facilities of programming languages.
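As a small illustration of pattern matching, the sketch below uses Python's `re` module to pull the date codes out of turnstile file names. The `SAMPLE_HTML` string and the `TURNSTILE_FILE` pattern are assumptions for the example, not the site's actual markup.

```python
import re

# Hypothetical page fragment; only the file-name pattern matters here.
SAMPLE_HTML = (
    '<a href="data/nyct/turnstile/turnstile_180922.txt">first</a> '
    '<a href="data/nyct/turnstile/turnstile_180915.txt">second</a>'
)

# Match "turnstile_" followed by a six-digit date code and ".txt".
TURNSTILE_FILE = re.compile(r'turnstile_(\d{6})\.txt')

date_codes = TURNSTILE_FILE.findall(SAMPLE_HTML)
print(date_codes)
```

Regular expressions work well for flat patterns like file names, but they are fragile for nested HTML, which is where a real parser helps.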

HTTP programming

Static and dynamic web pages can be retrieved by sending HTTP requests to the remote web server using socket programming.
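A minimal sketch of what that looks like at the socket level is shown below. The function names `build_get_request` and `http_get` are made up for this example; only the request builder is exercised in the demo, so no network connection is opened.

```python
import socket


def build_get_request(host, path='/'):
    """Build the bytes of a minimal HTTP/1.1 GET request."""
    lines = [
        f'GET {path} HTTP/1.1',
        f'Host: {host}',
        'Connection: close',
        '',  # blank line that ends the header section
        '',
    ]
    return '\r\n'.join(lines).encode('ascii')


def http_get(host, path='/', port=80, timeout=10.0):
    """Send the request over a TCP socket and return the raw response bytes."""
    with socket.create_connection((host, port), timeout=timeout) as conn:
        conn.sendall(build_get_request(host, path))
        chunks = []
        while True:
            chunk = conn.recv(4096)
            if not chunk:  # server closed the connection
                break
            chunks.append(chunk)
    return b''.join(chunks)


request_bytes = build_get_request('example.com', '/index.html')
print(request_bytes.decode('ascii'))
```

Libraries such as requests wrap these steps (plus HTTPS, redirects, and decoding), which is why the code earlier in these notes uses `requests.get` instead.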

HTML parsing

Many websites have large collections of pages generated dynamically from an underlying structured source like a database. Data of the same category are typically encoded into similar pages by a common script or template.

DOM parsing

By embedding a full-fledged web browser, such as Internet Explorer or the Mozilla browser control, programs can retrieve the dynamic content generated by client-side scripts.

Vertical aggregation

There are several companies that have developed vertical specific harvesting platforms. These platforms create and monitor a multitude of “bots” for specific verticals with no “man in the loop” (no direct human involvement), and no work related to a specific target site.

Semantic annotation recognizing

The pages being scraped may include metadata or semantic markup and annotations, which can be used to locate specific data snippets.
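One common form of such markup is a JSON-LD block inside a `<script type="application/ld+json">` tag. The sketch below reads it with the standard library; the `SAMPLE_HTML` page and the `JsonLdExtractor` class are assumptions for illustration.

```python
import json
from html.parser import HTMLParser

# Hypothetical page carrying one JSON-LD annotation.
SAMPLE_HTML = """
<html><head>
<script type="application/ld+json">
{"@type": "Product", "name": "Example Widget", "offers": {"price": "19.99"}}
</script>
</head><body><h1>Example Widget</h1></body></html>
"""


class JsonLdExtractor(HTMLParser):
    """Collect the parsed contents of every JSON-LD script block."""

    def __init__(self):
        super().__init__()
        self._in_json_ld = False
        self._buffer = []
        self.items = []

    def handle_starttag(self, tag, attrs):
        if tag == 'script' and dict(attrs).get('type') == 'application/ld+json':
            self._in_json_ld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_json_ld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == 'script' and self._in_json_ld:
            self.items.append(json.loads(''.join(self._buffer)))
            self._in_json_ld = False


extractor = JsonLdExtractor()
extractor.feed(SAMPLE_HTML)
product = extractor.items[0]
print(product['name'], product['offers']['price'])
```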

Software

There are many software tools available that can be used to customize web-scraping solutions. This software may attempt to automatically recognize the data structure of a page or provide a recording interface that removes the necessity to manually write web-scraping code.

Respect Robots.txt

Web spiders should ideally follow a website’s robots.txt file while scraping. It has specific rules for good behavior, such as how frequently you can scrape, which pages you may scrape, and which ones you may not. Some websites allow Google to scrape them while not allowing any other scrapers.
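Python's standard library can read these rules through `urllib.robotparser`. The sketch below parses an inline robots.txt so it runs without network access; the `SAMPLE_ROBOTS_TXT` contents, the 'my-scraper' agent name, and the example.com URLs are assumptions for the example.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt contents; a real site will publish its own rules.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Crawl-delay: 2
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(SAMPLE_ROBOTS_TXT.splitlines())

# Ask whether our (made-up) user agent may fetch each URL.
allowed = parser.can_fetch('my-scraper', 'http://example.com/data/file.txt')
blocked = parser.can_fetch('my-scraper', 'http://example.com/private/file.txt')
delay = parser.crawl_delay('my-scraper')

print(allowed, blocked, delay)
```

Against a live site, `parser.set_url(...)` followed by `parser.read()` would load the file from the site itself instead of the inline string.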

Make the crawling slower, do not slam the server, treat websites nicely

Web scraping bots fetch data very fast, but it is easy for a site to detect your scraper, as humans cannot browse that fast. The faster you crawl, the worse it is for everyone.

Do not follow the same crawling pattern

Humans browse a site with somewhat random actions and rarely repeat exactly the same tasks. Web scraping bots tend to follow the same crawling pattern because they are programmed that way unless told otherwise.
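One way to slow down and vary the pattern at the same time is to randomize both the wait between requests and the order of pages. This is only a sketch: `jittered_delay` and `shuffled` are hypothetical helpers, and the base and jitter values are arbitrary.

```python
import random


def jittered_delay(base_seconds, jitter_seconds, rng=random):
    """Return base_seconds plus a random extra wait of up to jitter_seconds."""
    return base_seconds + rng.uniform(0, jitter_seconds)


def shuffled(urls, rng=random):
    """Return a new list with the URLs in random order; the input is untouched."""
    copy = list(urls)
    rng.shuffle(copy)
    return copy


rng = random.Random(42)  # seeded only to make the demo repeatable
urls = ['page-1', 'page-2', 'page-3', 'page-4']
order = shuffled(urls, rng)
waits = [jittered_delay(1.0, 2.0, rng) for _ in order]

for url, wait in zip(order, waits):
    print(f'would wait {wait:.2f}s, then fetch {url}')
```

Each wait falls between 1 and 3 seconds here, so requests no longer arrive at a perfectly regular interval.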

Make requests through Proxies and rotate them as needed

When scraping, your IP address can be seen. A site will know what you are doing and if you are collecting data.
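A simple rotation can be sketched with `itertools.cycle`. The proxy addresses below are placeholders, and `proxy_rotation` is a made-up helper; the dictionaries it yields follow the shape that the requests library accepts in its `proxies` argument.

```python
from itertools import cycle

# Hypothetical proxy addresses; replace with proxies you are allowed to use.
PROXIES = [
    'http://10.0.0.1:8080',
    'http://10.0.0.2:8080',
    'http://10.0.0.3:8080',
]


def proxy_rotation(proxies):
    """Yield requests-style proxy mappings, cycling through the pool forever."""
    for proxy in cycle(proxies):
        yield {'http': proxy, 'https': proxy}


rotation = proxy_rotation(PROXIES)
first_four = [next(rotation)['http'] for _ in range(4)]
print(first_four)
```

With requests, each call could then look like `requests.get(url, proxies=next(rotation))`.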

Rotate User Agents and corresponding HTTP Request Headers between requests

A User-Agent is a request header that tells the server which web browser is being used. If the User-Agent is not set, some websites won’t let you view content. Every request made from a web browser contains a User-Agent header, and using the same User-Agent consistently makes a bot easier to detect. You can find your own User-Agent by typing ‘what is my user agent’ into Google’s search bar.
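A minimal sketch of rotating the header between requests is shown below. The User-Agent strings are examples only, and `random_headers` is a hypothetical helper; the resulting dictionary is the kind of value passed to the `headers` argument of `requests.get`.

```python
import random

# Example User-Agent strings, assumed for illustration; keep a real list up to date.
USER_AGENTS = [
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 '
    '(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36',
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 '
    '(KHTML, like Gecko) Version/17.0 Safari/605.1.15',
    'Mozilla/5.0 (X11; Linux x86_64; rv:120.0) Gecko/20100101 Firefox/120.0',
]


def random_headers(rng=random):
    """Pick a User-Agent at random and pair it with a matching basic header."""
    return {
        'User-Agent': rng.choice(USER_AGENTS),
        'Accept-Language': 'en-US,en;q=0.9',
    }


headers = random_headers()
print(headers['User-Agent'])
```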

Use a headless browser like Puppeteer, Selenium or Playwright

If none of the methods above works, the website must be checking if you are a REAL browser.

Beware of Honey Pot Traps

Honeypots are systems set up to lure hackers and detect any hacking attempts that try to gain information. It is usually an application that imitates the behavior of a real system.
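For scrapers, a honeypot often takes the form of a link that is hidden from human visitors, so only a bot would follow it. The sketch below skips links hidden through the `hidden` attribute or an inline style. It is a partial check under stated assumptions: `SAMPLE_HTML` and `VisibleLinkCollector` are made up, and links hidden through external CSS would not be caught.

```python
from html.parser import HTMLParser

# Hypothetical page fragment with two hidden trap links.
SAMPLE_HTML = """
<a href="/page-2">Next page</a>
<a href="/trap" style="display: none">Do not follow</a>
<a href="/hidden" hidden>Also hidden</a>
<a href="/about">About</a>
"""


class VisibleLinkCollector(HTMLParser):
    """Separate links that look visible from links hidden by inline markup."""

    def __init__(self):
        super().__init__()
        self.visible = []
        self.skipped = []

    def handle_starttag(self, tag, attrs):
        if tag != 'a':
            return
        attributes = dict(attrs)
        href = attributes.get('href')
        if not href:
            return
        # Normalize the inline style so "display: none" and "display:none" match.
        style = (attributes.get('style') or '').replace(' ', '').lower()
        hidden = ('hidden' in attributes
                  or 'display:none' in style
                  or 'visibility:hidden' in style)
        (self.skipped if hidden else self.visible).append(href)


collector = VisibleLinkCollector()
collector.feed(SAMPLE_HTML)
print(collector.visible)
print(collector.skipped)
```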

Check if Website is Changing Layouts

Some websites make it tricky for scrapers, serving slightly different layouts.

For example, pages 1-20 of a website may use one layout, while the rest of the pages use a different one.
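A scraper can guard against this by checking that the markers it relies on are present before parsing a page. The sketch below is deliberately simple: `missing_markers`, the marker strings, and both sample pages are assumptions for illustration.

```python
def missing_markers(html, expected_markers):
    """Return the expected markers that do not appear in the page source."""
    return [marker for marker in expected_markers if marker not in html]


# Hypothetical markers the scraper depends on.
EXPECTED_MARKERS = ['class="product-title"', 'class="product-price"']

PAGE_OLD_LAYOUT = (
    '<h1 class="product-title">Widget</h1>'
    '<span class="product-price">19.99</span>'
)
PAGE_NEW_LAYOUT = (
    '<h2 class="item-name">Widget</h2>'
    '<span class="product-price">19.99</span>'
)

for name, page in [('old', PAGE_OLD_LAYOUT), ('new', PAGE_NEW_LAYOUT)]:
    missing = missing_markers(page, EXPECTED_MARKERS)
    status = 'ok' if not missing else f'layout changed, missing {missing}'
    print(name, status)
```

Logging the pages that fail this check makes a layout change visible early, instead of silently producing empty fields.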

Avoid scraping data behind a login

A login is basically permission to access certain web pages. Some websites, like Indeed, do not permit scraping behind a login.

If a page is protected by login, the scraper would have to send some information or cookies along with each request to view the page.

Use Captcha Solving Services

Many websites use anti-scraping measures. If you are scraping a website on a large scale, the website will eventually block you.

How can websites detect and block web scraping?

Websites can use different mechanisms to detect a scraper/spider from a normal user. Some of these methods are enumerated below:

- Unusual traffic/high download rate

- Repetitive tasks performed on the website in the same browsing pattern

- Checking if you are a real browser

- Detection through honeypots

How do you find out if a website has blocked or banned you?

If any of the following signs appear on the site that you are crawling, it is usually a sign of being blocked or banned.

- CAPTCHA pages

- Unusual content delivery delays

- Frequent responses with HTTP 404, 301 or 50x status codes
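The signs above can be turned into a rough automated check. The sketch below is a heuristic under stated assumptions: `is_suspicious`, `probably_blocked`, the 10-second slowness threshold, and the 50% ratio are all made-up choices, and each response is represented as a plain `(status_code, body, elapsed_seconds)` tuple.

```python
def is_suspicious(status_code, body, elapsed_seconds, slow_threshold=10.0):
    """Flag a single response that shows one of the warning signs."""
    if status_code in (301, 404) or 500 <= status_code <= 599:
        return True
    if 'captcha' in body.lower():
        return True
    return elapsed_seconds > slow_threshold


def probably_blocked(responses, max_suspicious_ratio=0.5):
    """Return True when more than the given share of responses look suspicious."""
    if not responses:
        return False
    suspicious = sum(is_suspicious(*response) for response in responses)
    return suspicious / len(responses) > max_suspicious_ratio


# Hypothetical recent responses: (status_code, body, elapsed_seconds).
recent = [
    (200, '<html>ok</html>', 0.4),
    (404, '', 0.3),
    (200, 'Please solve this CAPTCHA to continue', 0.5),
    (503, '', 0.2),
]
print(probably_blocked(recent))
```

A single 404 or slow page is normal, which is why the check looks at the share of suspicious responses rather than any one of them.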